# Copyright 2021 NVIDIA Corporation. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# ================================

Taking the Next Step with Merlin Models: Define Your Own Architecture

This notebook was created using the latest stable merlin-tensorflow container.

In the Iterating over Deep Learning Models using Merlin Models example, we benchmarked standard and deep learning-based ranking models provided by the high-level Merlin Models API. The library also includes the standard deep learning components that let RecSys practitioners and researchers define custom models, train them, and export them for inference.

In this example, we combine pre-existing blocks and demonstrate how to create the DLRM architecture.

Learning objectives

Understand the building blocks of Merlin Models

Define a model architecture from scratch

Introduction to Merlin Models core building blocks

The Block is the core abstraction in Merlin Models and is the class from which all blocks inherit.

The class extends the tf.keras.layers.Layer base class and implements a number of properties that simplify the creation of custom blocks and models. These properties include the Schema object, which is used to determine the embedding dimensions, input shapes, and output shapes. Additionally, each Block has a ModelContext instance that stores and retrieves public variables and shares them, as additional metadata, with other blocks in the same model.

Before deep-diving into the definition of the DLRM architecture, let’s start by listing the core components you need to know to define a model from scratch:

Features Blocks

They include input blocks to process various inputs based on their types and shapes. Merlin Models supports three main blocks:

EmbeddingFeatures: Input block for embedding lookups of categorical features.

SequenceEmbeddingFeatures: Input block for embedding lookups of sequential categorical features (3D tensors).

ContinuousFeatures: Input block for continuous features.
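To build intuition for what these input blocks return, the following framework-free sketch mimics them with plain Python lists standing in for tensors. It is not Merlin code: the helper names (make_table, embedding_features, sequence_embedding_features, continuous_features) are hypothetical, the ragged list of lists stands in for the 3D tensor of sequential features, and the real blocks create trainable TensorFlow embedding tables sized from the schema.

```python
import random

def make_table(cardinality, dim, seed=0):
    """Hypothetical helper: a randomly initialized table with one row per category id."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in range(cardinality)]

def embedding_features(batch, tables):
    """One embedding row per example for each categorical feature -> [batch, dim]."""
    return {name: [table[i] for i in batch[name]] for name, table in tables.items()}

def sequence_embedding_features(batch, tables):
    """One embedding row per sequence element -> [batch, seq_len, dim] (ragged here)."""
    return {
        name: [[table[i] for i in seq] for seq in batch[name]]
        for name, table in tables.items()
    }

def continuous_features(batch, names):
    """Continuous features pass through unchanged as [batch, 1] columns."""
    return {name: [[value] for value in batch[name]] for name in names}

# A toy batch of two examples; "genres" holds a variable-length list per example.
batch = {"userId": [3, 1], "genres": [[2], [1, 4]], "TE_zipcode_rating": [1.0, -0.6]}
emb = embedding_features(batch, {"userId": make_table(cardinality=5, dim=4)})
seq = sequence_embedding_features(batch, {"genres": make_table(cardinality=6, dim=4, seed=1)})
cont = continuous_features(batch, ["TE_zipcode_rating"])
print(len(emb["userId"]), [len(s) for s in seq["genres"]], cont["TE_zipcode_rating"])
# prints: 2 [1, 2] [[1.0], [-0.6]]
```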

Transformations Blocks

They include various operators commonly used to transform tensors in various parts of the model, such as:

ToDense: It takes a dictionary of raw input tensors and transforms the sparse tensors into dense tensors.

L2Norm: It takes a single hidden tensor or a dictionary of hidden tensors and applies L2 normalization along a given axis.

LogitsTemperatureScaler: It scales the output tensor of predicted logits to lower the model's confidence.
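L2Norm and LogitsTemperatureScaler are simple arithmetic, and the sketch below restates them in plain Python. The function names are hypothetical, and the real blocks operate on TensorFlow tensors along a configurable axis. The sketch also shows why a temperature above 1 lowers confidence: dividing a logit by the temperature moves its sigmoid probability toward 0.5.

```python
import math

def l2_normalize(vector, eps=1e-12):
    """Divide a vector by its Euclidean norm so that it has unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / max(norm, eps) for x in vector]

def scale_logits(logits, temperature):
    """Divide each logit by the temperature (temperature > 1 softens predictions)."""
    return [z / temperature for z in logits]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

unit = l2_normalize([3.0, 4.0])  # [0.6, 0.8]
logits = [4.0, -4.0]
probs = [sigmoid(z) for z in logits]                                     # ~[0.982, 0.018]
softened = [sigmoid(z) for z in scale_logits(logits, temperature=2.0)]  # ~[0.881, 0.119]
```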

Aggregations Blocks

They include common aggregation operations to combine multiple tensors, such as:

ConcatFeatures: Concatenates a dictionary of tensors along a given dimension.

StackFeatures: Stacks a dictionary of tensors along a given dimension.

CosineSimilarity: Calculates the cosine similarity between two tensors.
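The three aggregations differ mainly in the shape they produce. In the plain-Python sketch below, which uses hypothetical helper names and lists in place of tensors, concatenation flattens the per-feature vectors into one long vector. Stacking keeps them as separate rows and therefore requires equal lengths. Cosine similarity reduces two vectors to a single score. The sketch concatenates in dictionary insertion order, which is an assumption; the library may order features differently.

```python
import math

def concat_features(features):
    """Concatenate per-feature vectors (in insertion order) into one flat vector."""
    return [x for vector in features.values() for x in vector]

def stack_features(features):
    """Stack equally sized per-feature vectors into a [num_features, dim] matrix."""
    vectors = list(features.values())
    if len({len(v) for v in vectors}) != 1:
        raise ValueError("stacking requires vectors of the same length")
    return [list(v) for v in vectors]

def cosine_similarity(a, b):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

features = {"user": [1.0, 0.0], "item": [1.0, 1.0]}
flat = concat_features(features)     # [1.0, 0.0, 1.0, 1.0]
stacked = stack_features(features)   # [[1.0, 0.0], [1.0, 1.0]]
sim = cosine_similarity(features["user"], features["item"])  # 1 / sqrt(2), about 0.7071
```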

Connect Methods

The Block base class implements several connect methods that control how a given block is linked to other blocks:

connect: Connect the block to other blocks sequentially. The output is a tensor returned by the last block.

connect_branch: Link the block to other blocks in parallel. The output is a dictionary containing the output tensor of each block.

connect_with_shortcut: Connect the block to other blocks sequentially and apply a skip connection with the block's output.

connect_with_residual: Connect the block to other blocks sequentially and apply a residual sum with the block's output.
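The four connect patterns can be mimicked with ordinary function composition. In the sketch below, each block is a plain Python callable on a list of floats. The names sequential, parallel, with_shortcut, and with_residual are hypothetical analogues rather than Merlin methods. The shortcut variant aggregates by concatenation with the block's own output first, which is one of several possible aggregation choices.

```python
def sequential(*blocks):
    """connect: feed the output of each block into the next one."""
    def run(x):
        for block in blocks:
            x = block(x)
        return x
    return run

def parallel(branches):
    """connect_branch: apply every named branch to the same input."""
    return lambda x: {name: branch(x) for name, branch in branches.items()}

def with_shortcut(block, *others):
    """connect_with_shortcut: concatenate the block's output with the path's output."""
    path = sequential(*others)
    def run(x):
        h = block(x)
        return h + path(h)  # list concatenation acts as the skip connection
    return run

def with_residual(block, *others):
    """connect_with_residual: add the block's output element-wise to the path's output."""
    path = sequential(*others)
    def run(x):
        h = block(x)
        return [a + b for a, b in zip(h, path(h))]
    return run

def double(v):
    return [2 * a for a in v]

def increment(v):
    return [a + 1 for a in v]

x = [1.0, 2.0]
print(sequential(double, increment)(x))                          # [3.0, 5.0]
print(parallel({"double": double, "increment": increment})(x))  # {'double': [2.0, 4.0], 'increment': [2.0, 3.0]}
print(with_shortcut(double, increment)(x))                       # [2.0, 4.0, 3.0, 5.0]
print(with_residual(double, increment)(x))                       # [5.0, 9.0]
```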

Prediction Tasks

Merlin Models introduces the PredictionTask layer that defines the necessary blocks and transformation operations to compute the final prediction scores. It also provides the default loss and metrics related to the given prediction task.

Merlin Models supports the core tasks: BinaryClassificationTask, MultiClassClassificationTask, and RegressionTask. In addition to these tasks, Merlin Models provides tasks that are specific to recommender systems: NextItemPredictionTask and ItemRetrievalTask.
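The following minimal plain-Python sketch roughly illustrates what a binary classification task bundles together: a prediction head, an output activation, and a default loss. ToyBinaryTask is a hypothetical class, not Merlin's BinaryClassificationTask. It assumes a single linear output unit followed by a sigmoid and the binary cross-entropy loss, which is the conventional setup for this kind of task.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def binary_cross_entropy(targets, probs, eps=1e-7):
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    total = 0.0
    for y, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(targets)

class ToyBinaryTask:
    """Hypothetical stand-in for a binary task: linear head + sigmoid + BCE loss."""

    def __init__(self, weights, bias=0.0):
        self.weights = weights
        self.bias = bias

    def predict(self, hidden):
        """Map each hidden vector to a probability."""
        return [
            sigmoid(sum(w * h for w, h in zip(self.weights, row)) + self.bias)
            for row in hidden
        ]

    def loss(self, hidden, targets):
        return binary_cross_entropy(targets, self.predict(hidden))

task = ToyBinaryTask(weights=[0.5, -0.25])
hidden = [[2.0, 0.0], [0.0, 4.0]]   # logits are 1.0 and -1.0
preds = task.predict(hidden)        # about [0.731, 0.269]
loss = task.loss(hidden, [1, 0])    # about 0.313
```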

Implement the DLRM model with MovieLens-1M data

Now that we have introduced the core blocks of Merlin Models, let’s take a look at how we can combine them to define the DLRM architecture:

import os

import tensorflow as tf

import merlin.models.tf as mm
from merlin.datasets.entertainment import get_movielens
from merlin.schema.tags import Tags


We use the get_movielens function to download, extract, and preprocess the MovieLens 1M dataset:

DATA_FOLDER = os.getenv("DATA_FOLDER", "workspace/data")

train, valid = get_movielens(variant="ml-1m")


We display the first five rows of the validation data and use them to check the outputs of each building block:

valid.head()

| | userId | movieId | title | genres | gender | age | occupation | zipcode | TE_age_rating | TE_gender_rating | TE_occupation_rating | TE_zipcode_rating | TE_movieId_rating | TE_userId_rating | rating_binary | rating |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 624 | 773 | 772 | [2] | 1 | 2 | 8 | 32 | 0.568176 | -0.570641 | -0.087290 | 1.003093 | 0.057056 | 2.006259 | 1 | 4.0 |
| 1 | 1957 | 22 | 22 | [1] | 1 | 4 | 9 | 449 | 0.782921 | -0.549865 | 0.623558 | 0.068490 | 1.102673 | 0.660300 | 1 | 5.0 |
| 2 | 1163 | 71 | 71 | [3, 5] | 1 | 1 | 4 | 659 | -0.544132 | -0.555616 | -0.111193 | -0.621290 | 0.414818 | 0.905115 | 0 | 2.0 |
| 3 | 2569 | 327 | 327 | [1, 6] | 1 | 1 | 12 | 388 | -0.505204 | -0.549865 | 1.310142 | -1.092174 | 0.122365 | -0.706886 | 0 | 3.0 |
| 4 | 649 | 1137 | 1139 | [9, 4] | 1 | 1 | 11 | 509 | -0.505204 | -0.549865 | 1.438719 | -0.622696 | -1.186149 | -0.500930 | 0 | 3.0 |

We convert the first five rows of the valid dataset to a batch of input tensors:

batch = mm.sample_batch(valid, batch_size=5, shuffle=False, include_targets=False)

batch["userId"]

<tf.Tensor: shape=(5, 1), dtype=int32, numpy=
array([[ 624],
       [1957],
       [1163],
       [2569],
       [ 649]], dtype=int32)>

Define the inputs block

For the sake of simplicity, let’s create a schema with a subset of the following continuous and categorical features:

sub_schema = train.schema.select_by_name(
    [
        "userId",
        "movieId",
        "title",
        "gender",
        "TE_zipcode_rating",
        "TE_movieId_rating",
        "rating_binary",
    ]
)

We define the continuous layer based on the schema:

continuous_block = mm.ContinuousFeatures.from_schema(sub_schema, tags=Tags.CONTINUOUS)

We display the output tensor of the continuous block by using the data from the first batch. We can see the raw tensors of the continuous features:

continuous_block(batch)

{'TE_zipcode_rating': <tf.Tensor: shape=(5, 1), dtype=float32, numpy=
 array([[ 1.0030926 ],
        [ 0.06849001],
        [-0.6212898 ],
        [-1.092174  ],
        [-0.6226964 ]], dtype=float32)>,
 'TE_movieId_rating': <tf.Tensor: shape=(5, 1), dtype=float32, numpy=
 array([[ 0.05705612],
        [ 1.1026733 ],
        [ 0.41481796],
        [ 0.12236513],
        [-1.1861489 ]], dtype=float32)>}

We connect the continuous block to an MLPBlock instance to project the continuous features into the same dimensionality as the embedding width of the categorical features:

deep_continuous_block = continuous_block.connect(mm.MLPBlock([64]))

deep_continuous_block(batch).shape

TensorShape([5, 64])
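Conceptually, this projection is a dense layer applied to the two continuous values of each example. The plain-Python sketch below approximates it under several assumptions. The two continuous features are concatenated into one input vector per example, and the layer uses a ReLU activation. The helpers init_dense and dense_relu are hypothetical, and their random initialization is arbitrary rather than what MLPBlock actually uses.

```python
import random

def init_dense(in_dim, out_dim, seed=0):
    """Hypothetical initializer: small Gaussian weights [in_dim][out_dim], zero bias."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 0.1) for _ in range(out_dim)] for _ in range(in_dim)]
    return weights, [0.0] * out_dim

def dense_relu(x, weights, bias):
    """out[j] = max(0, sum_i x[i] * weights[i][j] + bias[j])."""
    return [
        max(0.0, sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j])
        for j in range(len(bias))
    ]

# TE_zipcode_rating and TE_movieId_rating for the first two examples of the batch.
continuous_rows = [[1.0030926, 0.05705612], [0.06849001, 1.1026733]]
weights, bias = init_dense(in_dim=2, out_dim=64)
projected = [dense_relu(row, weights, bias) for row in continuous_rows]
print(len(projected), len(projected[0]))  # 2 64
```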

We define the categorical embedding block based on the schema:

embedding_block = mm.EmbeddingFeatures.from_schema(sub_schema)

We display the output tensor of the categorical embedding block using the data from the first batch. We can see the embedding tensors of the categorical features, each with a default dimension of 64:

embeddings = embedding_block(batch)

embeddings.keys(), embeddings["userId"].shape

(dict_keys(['userId', 'movieId', 'title', 'gender']), TensorShape([5, 64]))

Let’s store the continuous and categorical representations in a single dictionary using a ParallelBlock instance:

dlrm_input_block = mm.ParallelBlock(
    {"embeddings": embedding_block, "deep_continuous": deep_continuous_block}
)

print("Output shapes of DLRM input block:")
for key, val in dlrm_input_block(batch).items():
    print("\t%s : %s" % (key, val.shape))

Output shapes of DLRM input block:
	userId : (5, 64)
	movieId : (5, 64)
	title : (5, 64)
	gender : (5, 64)
	deep_continuous : (5, 64)

By looking at the output, we can see that the ParallelBlock class applies embedding and continuous blocks, in parallel, to the same input batch. Additionally, it merges the resulting tensors into one dictionary.
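The printed shapes suggest a merge rule. The keys of the embeddings dictionary appear at the top level, and the single deep_continuous tensor is stored under its branch name. The following plain-Python sketch implements that rule. parallel_block is a hypothetical helper, the rule is inferred from the output above rather than taken from the library source, and raising an error on duplicate keys is a design choice of this sketch.

```python
def parallel_block(branches, batch):
    """Apply each named branch to the same batch and merge the outputs into one dict."""
    merged = {}
    for name, branch in branches.items():
        out = branch(batch)
        # A dict output is merged key by key; any other output is stored under its branch name.
        items = out.items() if isinstance(out, dict) else [(name, out)]
        for key, value in items:
            if key in merged:
                raise ValueError(f"duplicate output key: {key}")
            merged[key] = value
    return merged

def embeddings(batch):
    """Toy embedding branch: returns a dict of per-feature [batch, 4] outputs."""
    return {
        "userId": [[0.1] * 4 for _ in batch["userId"]],
        "gender": [[0.2] * 4 for _ in batch["gender"]],
    }

def deep_continuous(batch):
    """Toy continuous branch: returns a single [batch, 4] output."""
    return [[value] * 4 for value in batch["TE_zipcode_rating"]]

batch = {"userId": [624, 1957], "gender": [1, 1], "TE_zipcode_rating": [1.0, 0.07]}
outputs = parallel_block({"embeddings": embeddings, "deep_continuous": deep_continuous}, batch)
for key, value in outputs.items():
    print(key, (len(value), len(value[0])))
```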

Define the interaction block

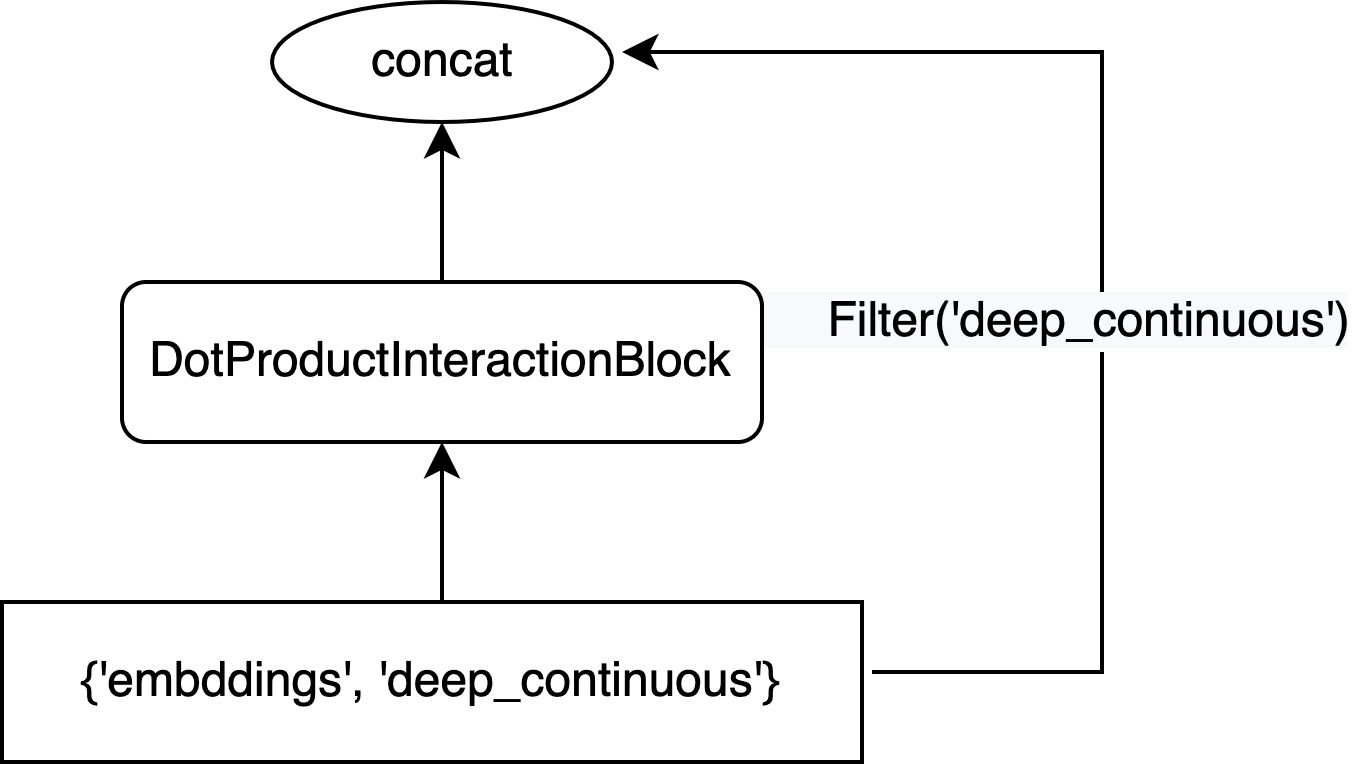

Now that we have a vector representation of each input feature, we will create the DLRM interaction block. It consists of three operations:

Apply a dot product between all pairs of continuous and categorical feature representations to learn pairwise interactions.

Concatenate the resulting pairwise interactions with the deep representation of the continuous features (skip connection).

Apply an MLPBlock with a series of dense layers to the concatenated tensor.
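The following plain-Python sketch reproduces the first two operations for a single example and shows where the width of 74 in the interaction output below comes from. It is not DotProductInteractionBlock itself: dlrm_interaction is a hypothetical helper, and the order of the pairs may differ from the library's. With five 64-dimensional vectors (the four embeddings plus deep_continuous), there are 5 × 4 / 2 = 10 distinct pairs. Concatenating the 10 dot products after the 64-dimensional shortcut gives 64 + 10 = 74 values. Placing the shortcut first is consistent with the rows printed below, where 64 non-negative values are followed by 10 values that can be negative.

```python
import itertools

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def dlrm_interaction(features, shortcut_key="deep_continuous"):
    """For one example: dot products of all distinct pairs of feature vectors
    (no self-pairs), concatenated after the shortcut vector."""
    vectors = list(features.values())
    pairwise = [dot(a, b) for a, b in itertools.combinations(vectors, 2)]
    return list(features[shortcut_key]) + pairwise

dim = 64
names = ["userId", "movieId", "title", "gender", "deep_continuous"]
example = {name: [0.01 * (k + 1)] * dim for k, name in enumerate(names)}
out = dlrm_interaction(example)
n = len(names)
print(len(out), dim + n * (n - 1) // 2)  # 74 74
```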

First, we use the connect_with_shortcut method to create the first two operations of the DLRM interaction block:

from merlin.models.tf.blocks.dlrm import DotProductInteractionBlock

dlrm_interaction = dlrm_input_block.connect_with_shortcut(
    DotProductInteractionBlock(), shortcut_filter=mm.Filter("deep_continuous"), aggregation="concat"
)

The Filter operation allows us to select the deep_continuous tensor from the dlrm_input_block outputs.

The following diagram provides a visualization of the operations that we constructed in the dlrm_interaction object.

dlrm_interaction(batch)

<tf.Tensor: shape=(5, 74), dtype=float32, numpy=

array([[ 0.00000000e+00, 6.82826638e-02, 0.00000000e+00,

0.00000000e+00, 1.80383995e-01, 5.98768219e-02,

2.09976867e-01, 1.44710079e-01, 1.28024677e-02,

8.87025297e-02, 9.24035460e-02, 1.57566026e-01,

1.41247764e-01, 7.41617829e-02, 1.01561561e-01,

0.00000000e+00, 5.23385704e-02, 0.00000000e+00,

2.92576909e-01, 0.00000000e+00, 1.73170507e-01,

2.37197266e-03, 0.00000000e+00, 0.00000000e+00,

2.43107140e-01, 1.05558299e-01, 0.00000000e+00,

1.96579084e-01, 0.00000000e+00, 1.02332458e-01,

0.00000000e+00, 2.33163089e-01, 1.00891396e-01,

0.00000000e+00, 1.51944682e-01, 9.00887847e-02,

0.00000000e+00, 1.43880740e-01, 2.64524519e-01,

2.50956304e-02, 1.80838302e-01, 0.00000000e+00,

2.35574916e-01, 0.00000000e+00, 1.50875002e-01,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

2.94353068e-01, 9.61890519e-02, 0.00000000e+00,

0.00000000e+00, 3.77075449e-02, 9.26294252e-02,

2.31126264e-01, 0.00000000e+00, 1.36887029e-01,

0.00000000e+00, 0.00000000e+00, 1.97133660e-01,

8.71547014e-02, 0.00000000e+00, 0.00000000e+00,

8.77225325e-02, 5.43038687e-03, 5.40966317e-02,

2.10780278e-02, 6.36962652e-02, -4.73170634e-03,

4.31833440e-04, -2.61518750e-02, 5.78600273e-04,

3.22858384e-03, -1.12014860e-02],

[ 0.00000000e+00, 0.00000000e+00, 6.47013634e-02,

0.00000000e+00, 0.00000000e+00, 2.34879330e-01,

0.00000000e+00, 0.00000000e+00, 2.98461556e-01,

1.75109744e-01, 3.08581203e-01, 2.01059490e-01,

0.00000000e+00, 2.86171734e-01, 1.20401457e-01,

3.16995412e-01, 8.87140930e-02, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 7.17573091e-02, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 2.40364641e-01,

0.00000000e+00, 2.17864458e-02, 2.50810742e-01,

1.97104260e-01, 0.00000000e+00, 1.60598055e-01,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 8.58442709e-02,

2.45450616e-01, 2.51366884e-01, 0.00000000e+00,

2.41251349e-01, 5.72509654e-02, 1.84434533e-01,

1.03250876e-01, 7.17810914e-02, 1.37276351e-01,

0.00000000e+00, 0.00000000e+00, 2.95059383e-01,

0.00000000e+00, 0.00000000e+00, 1.27920657e-01,

2.05035344e-01, 0.00000000e+00, 2.36066252e-01,

4.05495577e-02, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, -2.38138139e-02, 1.08807432e-02,

7.77303847e-03, -8.50100294e-02, 4.98681329e-03,

1.11919511e-02, 2.63783004e-04, -1.12807564e-02,

-1.01519739e-02, 1.61713101e-02],

[ 0.00000000e+00, 0.00000000e+00, 1.57255560e-01,

8.98111165e-02, 0.00000000e+00, 5.74663691e-02,

0.00000000e+00, 0.00000000e+00, 1.13988757e-01,

1.43191861e-02, 6.66051209e-02, 0.00000000e+00,

0.00000000e+00, 6.92329630e-02, 0.00000000e+00,

2.41790712e-01, 2.46394938e-03, 7.98416510e-02,

0.00000000e+00, 7.82607049e-02, 0.00000000e+00,

0.00000000e+00, 1.71624459e-02, 1.06490739e-01,

0.00000000e+00, 0.00000000e+00, 1.72846913e-01,

0.00000000e+00, 1.03909791e-01, 3.22300903e-02,

5.67845404e-02, 0.00000000e+00, 3.74426208e-02,

2.37422630e-01, 0.00000000e+00, 7.47651095e-03,

1.13228727e-02, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 4.96021621e-02,

0.00000000e+00, 2.84084052e-01, 0.00000000e+00,

1.15308307e-01, 1.02194354e-01, 2.14390516e-01,

0.00000000e+00, 0.00000000e+00, 1.53718740e-01,

0.00000000e+00, 0.00000000e+00, 6.09191619e-02,

0.00000000e+00, 2.89969984e-03, 0.00000000e+00,

2.68034309e-01, 8.19996595e-02, 0.00000000e+00,

0.00000000e+00, 6.74452633e-02, 6.79842429e-03,

0.00000000e+00, -4.81778830e-02, -1.77667178e-02,

1.82975288e-02, -2.25220341e-02, -2.50900462e-02,

-2.93726847e-02, -1.54160634e-02, -1.31359138e-02,

-4.18730685e-03, 7.02439900e-03],

[ 0.00000000e+00, 0.00000000e+00, 2.33091041e-01,

2.16639012e-01, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 3.60264517e-02,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

2.43452594e-01, 0.00000000e+00, 1.48931175e-01,

0.00000000e+00, 1.69527099e-01, 0.00000000e+00,

0.00000000e+00, 2.11448371e-01, 2.79685080e-01,

0.00000000e+00, 0.00000000e+00, 3.19585860e-01,

0.00000000e+00, 2.02429682e-01, 0.00000000e+00,

1.34652272e-01, 0.00000000e+00, 0.00000000e+00,

2.99382299e-01, 0.00000000e+00, 0.00000000e+00,

1.69278979e-01, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 3.89442369e-02,

0.00000000e+00, 3.50016952e-01, 0.00000000e+00,

6.85007647e-02, 1.43442661e-01, 2.66930610e-01,

0.00000000e+00, 0.00000000e+00, 1.88728899e-01,

6.13208488e-03, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 1.49810567e-01, 0.00000000e+00,

3.47220749e-01, 1.57068416e-01, 0.00000000e+00,

0.00000000e+00, 1.88575819e-01, 9.15735289e-02,

0.00000000e+00, -6.22908436e-02, -3.38319782e-03,

1.23800792e-01, 5.01863398e-02, -4.81331954e-03,

-2.04637516e-02, -4.44554165e-03, -1.80508029e-02,

-1.20663578e-02, 2.83019822e-02],

[ 1.51300639e-01, 2.16012716e-01, 4.31782342e-02,

2.45120600e-01, 1.40066464e-02, 0.00000000e+00,

1.38437048e-01, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 1.02661684e-01,

0.00000000e+00, 1.62780583e-01, 5.45618273e-02,

3.03599149e-01, 4.95723397e-01, 3.50861490e-01,

1.02114871e-01, 0.00000000e+00, 2.14776307e-01,

2.14072213e-01, 1.56319469e-01, 0.00000000e+00,

1.48854032e-01, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 1.58857539e-01, 0.00000000e+00,

4.05653536e-01, 1.22675776e-01, 4.25197184e-02,

1.12757748e-02, 2.18971923e-01, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 2.00662389e-01,

0.00000000e+00, 6.85178116e-03, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

2.33341128e-01, 3.21402609e-01, 0.00000000e+00,

0.00000000e+00, 3.84907007e-01, 0.00000000e+00,

0.00000000e+00, 1.16290517e-01, 0.00000000e+00,

0.00000000e+00, 2.52411574e-01, 2.16994107e-01,

5.22157736e-02, 2.45120507e-02, 4.00505736e-02,

-4.24136184e-02, -2.03966000e-03, 1.33487843e-02,

-2.18517566e-03, -1.06863603e-02, -2.89479154e-03,

-2.85907798e-02, -3.10467603e-03]], dtype=float32)>

Then, we project the learned interaction using a series of dense layers:

deep_dlrm_interaction = dlrm_interaction.connect(mm.MLPBlock([64, 128, 512]))

deep_dlrm_interaction(batch)

<tf.Tensor: shape=(5, 512), dtype=float32, numpy=
array([[0.055957  , 0.0198308 , 0.        , ..., 0.01130124, 0.        ,
        0.        ],
       [0.0727547 , 0.04420617, 0.03328843, ..., 0.03163406, 0.        ,
        0.        ],
       [0.03016799, 0.05012683, 0.01152872, ..., 0.03033926, 0.        ,
        0.        ],
       [0.00409173, 0.0635931 , 0.        , ..., 0.0143235 , 0.        ,
        0.        ],
       [0.        , 0.01937133, 0.        , ..., 0.        , 0.        ,
        0.        ]], dtype=float32)>

Define the Prediction block

At this stage, we have created the DLRM block that accepts a dictionary of categorical and continuous tensors as input. The output of this block is an interaction representation vector of dimension 512. The next step is to use this hidden representation for a prediction task. In our case, we use the rating_binary label, and the objective is to predict whether a user A will give a high rating to a movie B.

We use the BinaryClassificationTask class and evaluate performance with the AUC metric. We also use the LogitsTemperatureScaler block as a pre-transformation operation that scales the logits returned by the task before the loss and metrics are computed:

from merlin.models.tf.transforms.bias import LogitsTemperatureScaler

binary_task = mm.BinaryClassificationTask(
    sub_schema,
    pre=LogitsTemperatureScaler(temperature=2),
)
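AUC depends only on how the predictions rank positives against negatives. Dividing the logits by a positive temperature therefore changes the loss but not the exact AUC. The plain-Python sketch below checks this on made-up scores. The auc and bce helpers are hypothetical and compute the exact pairwise definition of the metric. tf.keras.metrics.AUC instead uses a thresholded approximation, so its reported value can shift slightly when probabilities move between thresholds.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def auc(labels, scores):
    """Exact ROC AUC: share of (positive, negative) pairs ranked correctly, ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def bce(labels, probs):
    """Mean binary cross-entropy (assumes probabilities strictly between 0 and 1)."""
    return -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p) for y, p in zip(labels, probs)
    ) / len(labels)

labels = [1, 0, 1, 0, 1]
logits = [2.5, -1.0, 0.3, 0.8, -0.2]           # made-up model outputs
probs = [sigmoid(z) for z in logits]
probs_t2 = [sigmoid(z / 2.0) for z in logits]  # temperature = 2

print(auc(labels, probs), auc(labels, probs_t2))  # both 4/6, about 0.667
print(bce(labels, probs), bce(labels, probs_t2))  # the losses differ
```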

Define, train, and evaluate the final DLRM Model

We pass the deep DLRM interaction block and the binary task to mm.Model, which builds the final model for us.

Note that the Model class inherits from the tf.keras.Model class:

model = mm.Model(deep_dlrm_interaction, binary_task)

type(model)

merlin.models.tf.models.base.Model

We train the model using the built-in tf.keras fit method:

model.compile(optimizer="adam", metrics=[tf.keras.metrics.AUC()])

model.fit(train, batch_size=1024, epochs=1)

782/782 [==============================] - 20s 16ms/step - loss: 0.6475 - auc: 0.7244 - regularization_loss: 0.0000e+00

<keras.callbacks.History at 0x7faa133ecd00>

Let’s check out the model evaluation scores:

metrics = model.evaluate(valid, batch_size=1024, return_dict=True)

metrics

196/196 [==============================] - 3s 9ms/step - loss: 0.6390 - auc: 0.7241 - regularization_loss: 0.0000e+00

{'loss': 0.6389783620834351,
 'auc': 0.7241206169128418,
 'regularization_loss': 0.0}

Note that the evaluate() progress bar updates the loss after every batch, whereas the final loss stored in the dictionary is aggregated over all batches.
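The sketch below shows one way per-batch losses can be aggregated: a running mean weighted by batch size. This is roughly how Keras-style loss tracking works, but treat it as an assumption rather than a description of Merlin's internals. The batch losses and sizes are made-up numbers. The smaller final batch shows that weighting by size differs from a plain average of the batch losses.

```python
def running_mean(batch_losses, batch_sizes):
    """Return the batch-size-weighted mean loss after each batch."""
    history, total, count = [], 0.0, 0
    for loss, size in zip(batch_losses, batch_sizes):
        total += loss * size
        count += size
        history.append(total / count)
    return history

batch_losses = [0.70, 0.60, 0.50]
batch_sizes = [1024, 1024, 512]  # the last batch is only partially filled
history = running_mean(batch_losses, batch_sizes)
unweighted = sum(batch_losses) / len(batch_losses)
print([round(h, 4) for h in history], round(unweighted, 4))  # [0.7, 0.65, 0.62] 0.6
```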

Save the model so we can use it for serving predictions in production or for resuming training with new observations:

model.save(os.path.join(DATA_FOLDER, "custom_dlrm"))

INFO:tensorflow:Unsupported signature for serialization: ((PredictionOutput(predictions=TensorSpec(shape=(None, 1), dtype=tf.float32, name='outputs/predictions'), targets=TensorSpec(shape=(None, 1), dtype=tf.float32, name='outputs/targets'), positive_item_ids=None, label_relevant_counts=None, valid_negatives_mask=None, negative_item_ids=None, sample_weight=None), <tensorflow.python.framework.func_graph.UnknownArgument object at 0x7faa33dea910>), {}).

WARNING:absl:Function `_wrapped_model` contains input name(s) TE_age_rating, TE_gender_rating, TE_movieId_rating, TE_occupation_rating, TE_userId_rating, TE_zipcode_rating, movieId, userId with unsupported characters which will be renamed to te_age_rating, te_gender_rating, te_movieid_rating, te_occupation_rating, te_userid_rating, te_zipcode_rating, movieid, userid in the SavedModel.

WARNING:absl:Found untraced functions such as train_compute_metrics, model_context_layer_call_fn, model_context_layer_call_and_return_conditional_losses, logits_temperature_scaler_layer_call_fn, logits_temperature_scaler_layer_call_and_return_conditional_losses while saving (showing 5 of 67). These functions will not be directly callable after loading.

INFO:tensorflow:Assets written to: workspace/data/custom_dlrm/assets

Conclusion

Merlin Models provides common and state-of-the-art RecSys architectures in a high-level API as well as all the required low-level building blocks for you to create your own architecture (input blocks, MLP layers, prediction tasks, loss functions, etc.). In this example, we explored a subset of these pre-existing blocks to create the DLRM model, but you can view our documentation to discover more. You can also contribute to the library by submitting new RecSys architectures and custom building Blocks.

Next steps

To learn more about how to deploy the trained DLRM model, please visit the Merlin Systems library and run the Serving-Ranking-Models-With-Merlin-Systems.ipynb notebook, which deploys an ensemble of an NVTabular Workflow and a trained model from Merlin Models to Triton Inference Server.