# Copyright 2021 NVIDIA Corporation. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ================================

Two-Stage Recommender Systems

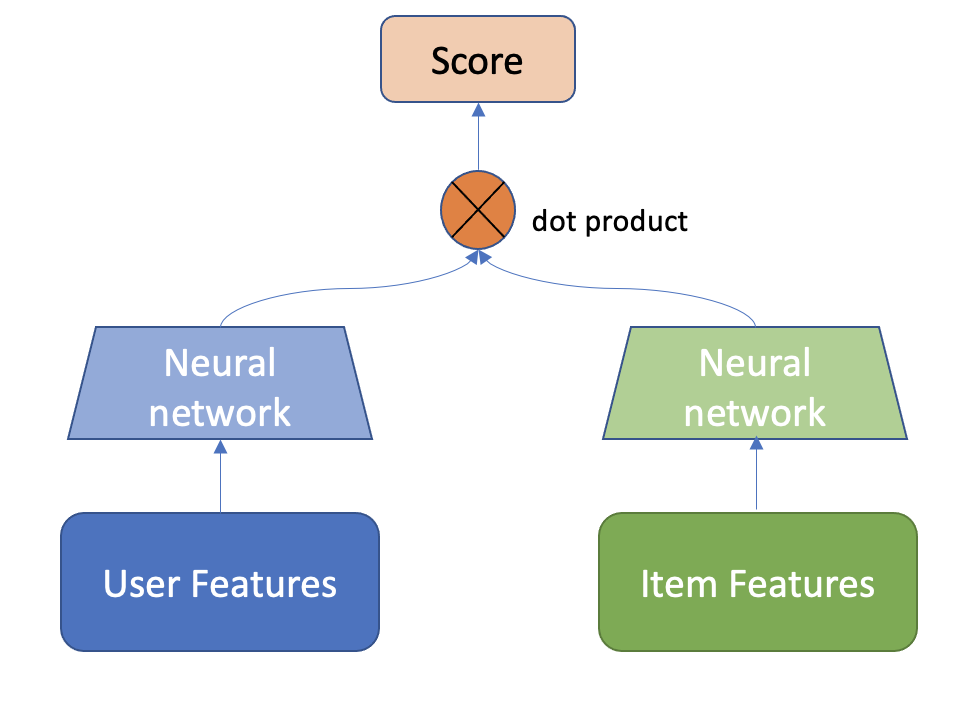

In large-scale recommender system pipelines, the item catalog (the number of unique items) can be on the order of millions. At that scale, a typical setup is a two-stage pipeline, in which a fast candidate retrieval model quickly extracts thousands of relevant items and then a more powerful ranking model (i.e., one with more features and a more powerful architecture) ranks the top-k items that will be displayed to the user. Because an ML-based candidate retrieval model must quickly score millions of items for a given user, popular choices are models that produce recommendation scores by simply computing the dot product of the user embedding and the item embedding. Two such models are Matrix Factorization, which learns low-rank user and item embeddings, and the Two-Tower architecture, a neural network with two MLP towers: user features are fed to one tower and item features to the other, and the towers output the user and item embeddings.
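The two-stage flow described above can be sketched in plain Python. This is only an illustration under stated assumptions: the embeddings, the item ids, and the `ranking_scores` table standing in for a ranking model are made up, and `retrieve` and `rank` are hypothetical helper names, not part of Merlin.

```python
import heapq

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def retrieve(user_emb, item_embs, num_candidates):
    """Stage 1: score every item by dot product and keep the best candidates."""
    return heapq.nlargest(
        num_candidates, item_embs, key=lambda item_id: dot(user_emb, item_embs[item_id])
    )

def rank(candidates, ranking_score, top_k):
    """Stage 2: re-score only the retrieved candidates with a costlier model."""
    return sorted(candidates, key=ranking_score, reverse=True)[:top_k]

# Toy embeddings (hypothetical values).
user_emb = [0.5, 1.0]
item_embs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0], "d": [-1.0, -1.0]}

candidates = retrieve(user_emb, item_embs, num_candidates=3)

# Stand-in for the output of a ranking model (hypothetical values).
ranking_scores = {"a": 0.9, "b": 0.2, "c": 0.6, "d": 0.1}
top_items = rank(candidates, ranking_scores.get, top_k=2)
```

The retrieval stage never calls the ranking model for item "d", which is the point of the design: the expensive scorer only sees the short candidate list.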

Dataset

In this notebook, we build a Two-Tower model for the item retrieval task using synthetic datasets that mimic the real Ali-CCP (Alibaba Click and Conversion Prediction) dataset.

Learning objectives

Preparing the data with NVTabular

Training and evaluating Two-Tower model with Merlin Models

Exporting the model for deployment

Importing Libraries

import os

import nvtabular as nvt

from nvtabular.ops import *

from merlin.models.utils.example_utils import workflow_fit_transform

from merlin.schema.tags import Tags

import merlin.models.tf as mm

from merlin.io.dataset import Dataset

import tensorflow as tf

2022-04-21 10:15:45.581013: I tensorflow/core/platform/cpu_feature_guard.cc:152] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: SSE3 SSE4.1 SSE4.2 AVX

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2022-04-21 10:15:47.757897: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1525] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 16255 MB memory: -> device: 0, name: Tesla V100-SXM2-32GB-LS, pci bus id: 0000:8a:00.0, compute capability: 7.0

# disable WARNING, INFO and DEBUG logging everywhere

import logging

logging.disable(logging.WARNING)

Feature Engineering with NVTabular

Let’s generate synthetic train and validation dataset objects.

from merlin.datasets.synthetic import generate_data

DATA_FOLDER = os.environ.get("DATA_FOLDER", "/workspace/data/")

NUM_ROWS = os.environ.get("NUM_ROWS", 1000000)

train, valid = generate_data("aliccp-raw", int(NUM_ROWS), set_sizes=(0.7, 0.3))

# define output path for the processed parquet files

output_path = os.path.join(DATA_FOLDER, "processed")

We keep only positive interactions, i.e. rows where click == 1, using the Filter() op.

user_id = ["user_id"] >> Categorify() >> TagAsUserID()

item_id = ["item_id"] >> Categorify() >> TagAsItemID()

item_features = ["item_category", "item_shop", "item_brand"] >> Categorify() >> TagAsItemFeatures()

user_features = ['user_shops', 'user_profile', 'user_group',

'user_gender', 'user_age', 'user_consumption_2', 'user_is_occupied',

'user_geography', 'user_intentions', 'user_brands', 'user_categories'] \

>> Categorify() >> TagAsUserFeatures()

inputs = user_id + item_id + item_features + user_features + ['click']

outputs = inputs >> Filter(f=lambda df: df["click"] == 1)
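To make the effect of this workflow concrete, here is a minimal pure-Python sketch of the two ideas it combines: encoding categorical values as contiguous integer ids, and keeping only the clicked rows. It is not NVTabular's implementation. The helper names are hypothetical, and the id conventions (first-seen order, ids starting at 1, 0 for unseen values) are assumptions made for the example; the real Categorify op's conventions depend on the NVTabular version.

```python
def fit_categories(values):
    """Assign each distinct value an integer id starting at 1 (assumed convention)."""
    mapping = {}
    for value in values:
        if value not in mapping:
            mapping[value] = len(mapping) + 1
    return mapping

def encode(values, mapping):
    """Map values to ids; unseen values fall back to 0 (assumed convention)."""
    return [mapping.get(value, 0) for value in values]

# Toy raw interactions (hypothetical data).
rows = [
    {"user_id": "u7", "item_id": "shoe", "click": 1},
    {"user_id": "u7", "item_id": "hat", "click": 0},
    {"user_id": "u9", "item_id": "shoe", "click": 1},
]

user_map = fit_categories(row["user_id"] for row in rows)
item_map = fit_categories(row["item_id"] for row in rows)

encoded = [
    {
        "user_id": user_map[row["user_id"]],
        "item_id": item_map[row["item_id"]],
        "click": row["click"],
    }
    for row in rows
]

# Equivalent in spirit to Filter(f=lambda df: df["click"] == 1).
positives = [row for row in encoded if row["click"] == 1]
```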

With the transform_aliccp function, we can run fit() and transform() on the raw dataset, applying the operators defined in the NVTabular workflow above. The processed parquet files are saved to output_path.

from merlin.datasets.ecommerce import transform_aliccp

transform_aliccp((train, valid), output_path, nvt_workflow=outputs)

/usr/local/lib/python3.8/dist-packages/cudf/core/dataframe.py:1292: UserWarning: The deep parameter is ignored and is only included for pandas compatibility.

warnings.warn(

Building a Two-Tower Model with Merlin Models

We will use a Two-Tower model for the item retrieval task. Real-world large-scale recommender systems have hundreds of millions of items (products) and users. Thus, these systems are often composed of two stages: candidate generation (retrieval) and ranking (scoring the retrieved items). At the candidate generation step, a subset of relevant items is retrieved from the large item corpus. You can read more about two-stage recommender systems here. In this example, we’re going to focus on the retrieval stage.

A Two-Tower Model consists of item (candidate) and user (query) encoder towers. With two towers, the model can learn representations (embeddings) for queries and candidates separately.

Image Adapted from: Off-policy Learning in Two-stage Recommender Systems

We use the schema object to define our model.

output_path

'/workspace/data/processed'

train = Dataset(os.path.join(output_path, 'train', '*.parquet'))

valid = Dataset(os.path.join(output_path, 'valid', '*.parquet'))

schema = train.schema

schema = schema.select_by_tag([Tags.ITEM_ID, Tags.USER_ID, Tags.ITEM, Tags.USER])

We can print out the feature column names.

schema.column_names

['user_id',

'item_id',

'item_category',

'item_shop',

'item_brand',

'user_shops',

'user_profile',

'user_group',

'user_gender',

'user_age',

'user_consumption_2',

'user_is_occupied',

'user_geography',

'user_intentions',

'user_brands',

'user_categories']

We expect the label names to be empty.

label_names = schema.select_by_tag(Tags.TARGET).column_names

label_names

[]

Negative sampling

Many datasets for recommender systems contain implicit feedback, i.e. logs of user interactions such as clicks, add-to-cart events, purchases, and music listening events, rather than explicit ratings that reflect user preferences over items. To learn from implicit feedback, we use the general (and naive) assumption that the items a user interacted with are more relevant to that user than the ones they did not interact with. Merlin Models provides some scalable negative sampling algorithms for the item retrieval task. In this example we use the in-batch sampling algorithm, which uses the items that other users in the same mini-batch interacted with as negatives.
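The in-batch idea can be illustrated with a few lines of plain Python. This is a sketch of the concept only, not the code behind mm.InBatchSampler; the function name and the toy batch are hypothetical.

```python
def in_batch_negatives(batch):
    """For each (user, item) positive pair, use the items of the other rows as negatives."""
    items = [item for _, item in batch]
    samples = []
    for row, (user, positive) in enumerate(batch):
        negatives = [item for other_row, item in enumerate(items) if other_row != row]
        samples.append((user, positive, negatives))
    return samples

# A mini-batch of positive interactions (hypothetical ids).
batch = [("u1", "i1"), ("u2", "i2"), ("u3", "i3")]
samples = in_batch_negatives(batch)
```

No extra items have to be drawn from the catalog, which is what makes the approach cheap. One caveat of this naive version is that an item the user actually interacted with can show up as a negative when it also appears in another row of the batch.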

Building the Model

Now, let’s build our Two-Tower model. In a nutshell, we aggregate all user features and feed them to the user tower, and we feed the item features to the item tower. Then we compute the positive score as the dot product of the user embedding and the item embedding, and we sample negative items (read more about negative sampling here and here), whose item embeddings are also multiplied by the user embedding. Finally, we apply the loss function to the positive and negative scores.

model = mm.TwoTowerModel(

schema,

query_tower=mm.MLPBlock([128, 64], no_activation_last_layer=True),

loss="categorical_crossentropy",

samplers=[mm.InBatchSampler()],

embedding_options = mm.EmbeddingOptions(infer_embedding_sizes=True),

metrics=[mm.RecallAt(10), mm.NDCGAt(10)]

)
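The scoring and loss step that such a model performs can be sketched in plain Python, assuming in-batch negatives and a softmax (categorical cross-entropy) loss in which row i of the batch has item i as its positive. The function name and the toy embeddings are hypothetical, and the sketch ignores details a real implementation may add, such as score scaling or sampling corrections.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def in_batch_softmax_loss(user_embs, item_embs):
    """Mean categorical cross-entropy where the positive of row i is item i."""
    losses = []
    for i, user in enumerate(user_embs):
        # Score the user against every item in the batch (1 positive, rest negatives).
        scores = [dot(user, item) for item in item_embs]
        # Numerically stable log-sum-exp over all scores.
        max_score = max(scores)
        log_sum_exp = max_score + math.log(sum(math.exp(s - max_score) for s in scores))
        # Cross-entropy of a softmax whose target class is the positive item.
        losses.append(log_sum_exp - scores[i])
    return sum(losses) / len(losses)

users = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = in_batch_softmax_loss(users, [[1.0, 0.0], [0.0, 1.0]])
swapped_loss = in_batch_softmax_loss(users, [[0.0, 1.0], [1.0, 0.0]])
uninformed_loss = in_batch_softmax_loss([[0.0, 0.0]] * 2, [[0.0, 0.0]] * 2)
```

When all scores are equal, this loss is the natural logarithm of the batch size. For the batch size of 4096 used in this notebook that is about 8.32, which is close to the training loss reported in the training log.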

Let’s explain the parameters in the TwoTowerModel():

no_activation_last_layer: when set to True, no activation is used for the top hidden layer. Learn more here.

infer_embedding_sizes: when set to True, the embedding dimension of each feature is defined automatically from the feature cardinality in the schema.

Metrics:

The following information retrieval metrics are used to compute the Top-10 accuracy of recommendation lists containing all items:

Normalized Discounted Cumulative Gain (NDCG@10): NDCG accounts for the rank of the relevant item in the recommendation list and is therefore a more fine-grained metric than hit rate (HR), which only checks whether the relevant item is among the top-k items.

Recall@10: Also known as HitRate@k when there is only one relevant item per recommendation list. Recall only checks whether the relevant item is among the top-k items.
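For the single-relevant-item case described above, both metrics reduce to short formulas. The sketch below is a plain-Python illustration with hypothetical function names, not the implementation behind mm.RecallAt or mm.NDCGAt. With one relevant item the ideal DCG is 1, so NDCG@k is 1 / log2(rank + 1) when the item is within the top k and 0 otherwise.

```python
import math

def recall_at_k(ranked_items, relevant_item, k):
    """1.0 if the relevant item is among the top-k items, else 0.0."""
    return 1.0 if relevant_item in ranked_items[:k] else 0.0

def ndcg_at_k(ranked_items, relevant_item, k):
    """NDCG for a single relevant item: 1 / log2(rank + 1) inside the top k."""
    top_k = ranked_items[:k]
    if relevant_item not in top_k:
        return 0.0
    rank = top_k.index(relevant_item) + 1  # 1-based position in the list
    return 1.0 / math.log2(rank + 1)

ranked = ["a", "b", "c", "d"]
hit_first = (recall_at_k(ranked, "a", 10), ndcg_at_k(ranked, "a", 10))
hit_third = (recall_at_k(ranked, "c", 10), ndcg_at_k(ranked, "c", 10))
```

Both lists count as a hit for recall, but NDCG rewards the first one more because the relevant item is ranked higher.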

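Next, we compile and train the model. We pass the Dataset objects and a batch size directly to fit(), which takes care of the data loading.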

model.compile(optimizer='adam', run_eagerly=False)

model.fit(train, validation_data=valid, batch_size=4096, epochs=3)

2022-04-21 10:15:57.763520: W tensorflow/python/util/util.cc:368] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.

Epoch 1/3

84/86 [============================>.] - ETA: 0s - recall_at_10: 0.0062 - ndcg_10: 0.0036 - loss: 8.3027 - regularization_loss: 0.0000e+00 - total_loss: 8.3027

2022-04-21 10:16:15.071222: W tensorflow/core/grappler/optimizers/loop_optimizer.cc:907] Skipping loop optimization for Merge node with control input: cond/branch_executed/_23

86/86 [==============================] - 16s 44ms/step - recall_at_10: 0.0062 - ndcg_10: 0.0036 - loss: 8.2675 - regularization_loss: 0.0000e+00 - total_loss: 8.2675 - val_recall_at_10: 0.0062 - val_ndcg_10: 0.0033 - val_loss: 7.9288 - val_regularization_loss: 0.0000e+00 - val_total_loss: 7.9288

Epoch 2/3

86/86 [==============================] - 2s 23ms/step - recall_at_10: 0.0087 - ndcg_10: 0.0047 - loss: 8.2635 - regularization_loss: 0.0000e+00 - total_loss: 8.2635 - val_recall_at_10: 0.0076 - val_ndcg_10: 0.0041 - val_loss: 7.9288 - val_regularization_loss: 0.0000e+00 - val_total_loss: 7.9288

Epoch 3/3

86/86 [==============================] - 2s 23ms/step - recall_at_10: 0.0117 - ndcg_10: 0.0068 - loss: 8.2624 - regularization_loss: 0.0000e+00 - total_loss: 8.2624 - val_recall_at_10: 0.0084 - val_ndcg_10: 0.0045 - val_loss: 7.9306 - val_regularization_loss: 0.0000e+00 - val_total_loss: 7.9306

<keras.callbacks.History at 0x7fa13ea8ebb0>

Exporting Retrieval Models

So far we have trained and evaluated our retrieval model. The next step is to deploy the model and generate top-K recommendations for a given user (query). We can serve the model efficiently by indexing the trained item embeddings in an Approximate Nearest Neighbors (ANN) engine. For a given user, the query vector is generated by passing the user features through the user tower of the retrieval model. At serve time, we run an ANN search with that vector to find the ids of the nearby item vectors, so the user embedding is scored against the indexed item embeddings inside the ANN engine and the top-K items are returned.

To do so, we need to export:

user (query) tower

item and user features

item embeddings
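To give an intuition for what an ANN lookup over exported item embeddings does, here is a toy sketch based on random-hyperplane hashing, one of several ANN techniques. It is an assumption chosen for illustration and not necessarily what a production ANN engine or the Merlin examples use. All function names and vectors are hypothetical.

```python
import random

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def make_hyperplanes(dim, num_planes, seed=0):
    """Draw random hyperplanes (as normal vectors) with a fixed seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]

def bucket_key(vector, hyperplanes):
    """One bit per hyperplane: the side of the plane the vector lies on."""
    return tuple(dot(vector, plane) >= 0.0 for plane in hyperplanes)

def build_index(item_vectors, hyperplanes):
    """Group item ids by bucket so a query only scans one bucket."""
    index = {}
    for item_id, vector in item_vectors.items():
        index.setdefault(bucket_key(vector, hyperplanes), []).append(item_id)
    return index

def ann_search(user_vector, index, item_vectors, hyperplanes, k):
    """Score only the items in the query's bucket and return the top-k ids."""
    candidates = index.get(bucket_key(user_vector, hyperplanes), [])
    ranked = sorted(
        candidates, key=lambda item_id: dot(user_vector, item_vectors[item_id]), reverse=True
    )
    return ranked[:k]

# Toy item embeddings keyed by item id (hypothetical values).
item_vectors = {1: [1.0, 0.0], 2: [0.9, 0.1], 3: [-1.0, 0.0]}
planes = make_hyperplanes(dim=2, num_planes=4)
index = build_index(item_vectors, planes)
result = ann_search([1.0, 0.0], index, item_vectors, planes, k=2)
```

The search is approximate because items that land in a different bucket are never scored, even if their dot product with the query would have been high. Real engines trade this loss of recall against speed with more elaborate index structures.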

Save User (query) tower

We can save the user tower to disk as a TF model. The user tower is needed to generate a user embedding vector when a user feature vector x is fed into it.

query_tower = model.retrieval_block.query_block()

query_tower.save('query_tower')

Extract and save User features

With the unique_rows_by_features utility function, we can easily extract the unique user and item feature tables as cuDF dataframes. Note that for the user features table, we use the USER and USER_ID tags.

from merlin.models.utils.dataset import unique_rows_by_features

user_features = unique_rows_by_features(train, Tags.USER, Tags.USER_ID).compute().reset_index(drop=True)

user_features.head()

| | user_id | user_shops | user_profile | user_group | user_gender | user_age | user_consumption_2 | user_is_occupied | user_geography | user_intentions | user_brands | user_categories |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 1 | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 2 | 2 |

| 2 | 3 | 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 3 | 3 | 3 |

| 3 | 4 | 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 4 | 4 | 4 |

| 4 | 5 | 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 5 | 5 | 5 |

user_features.shape

(676, 12)

# save to disk

user_features.to_parquet('user_features.parquet')
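The extraction step above can be pictured with a small pure-Python sketch. It assumes that the utility keeps the selected feature columns and one row per id, which is an assumption about its behavior rather than a description of its implementation. The function name and rows are hypothetical.

```python
def unique_rows_by_id(rows, feature_columns, id_column):
    """Keep the feature columns of the first row seen for each id."""
    seen = set()
    unique = []
    for row in rows:
        key = row[id_column]
        if key in seen:
            continue
        seen.add(key)
        unique.append({column: row[column] for column in feature_columns})
    return unique

# Toy interaction log: the same user appears in several rows (hypothetical data).
interactions = [
    {"user_id": 1, "user_age": 3, "item_id": 10},
    {"user_id": 1, "user_age": 3, "item_id": 11},
    {"user_id": 2, "user_age": 5, "item_id": 10},
]
user_table = unique_rows_by_id(interactions, ["user_id", "user_age"], "user_id")
```

The result has one row per user, which is the shape a serving system needs in order to look up the features of a user by id.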

Extract and save Item features

item_features = unique_rows_by_features(train, Tags.ITEM, Tags.ITEM_ID).compute().reset_index(drop=True)

item_features.head()

| | item_id | item_category | item_shop | item_brand |

|---|---|---|---|---|

| 0 | 1 | 1 | 1 | 1 |

| 1 | 2 | 2 | 2 | 2 |

| 2 | 3 | 3 | 3 | 3 |

| 3 | 4 | 4 | 4 | 4 |

| 4 | 5 | 5 | 5 | 5 |

# save to disk

item_features.to_parquet('item_features.parquet')

Extract and save Item embeddings

item_embs = model.item_embeddings(Dataset(item_features, schema=schema), batch_size=1024)

item_embs_df = item_embs.compute(scheduler="synchronous")

item_embs_df

| | item_id | item_category | item_shop | item_brand | 0 | 1 | 2 | 3 | 4 | 5 | ... | 54 | 55 | 56 | 57 | 58 | 59 | 60 | 61 | 62 | 63 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1 | 1 | 1 | -0.073576 | 0.085595 | -0.180240 | -0.027148 | -0.191017 | -0.104568 | ... | 0.248656 | -0.045637 | 0.076258 | -0.081022 | 0.060888 | 0.162383 | 0.078302 | -0.216183 | -0.144103 | -0.223617 |

| 1 | 2 | 2 | 2 | 2 | 0.106903 | 0.024441 | -0.055793 | -0.141893 | -0.078035 | -0.036643 | ... | 0.071353 | -0.061388 | 0.100870 | -0.176205 | -0.091968 | 0.078213 | 0.111239 | -0.038364 | -0.010783 | -0.153099 |

| 2 | 3 | 3 | 3 | 3 | -0.183815 | 0.066229 | -0.169174 | -0.011347 | -0.293196 | 0.133997 | ... | 0.242444 | -0.164485 | 0.186567 | -0.027846 | 0.047943 | 0.129363 | 0.071678 | -0.315024 | -0.112482 | 0.003846 |

| 3 | 4 | 4 | 4 | 4 | -0.103461 | -0.035693 | -0.137998 | 0.056771 | -0.322394 | 0.082303 | ... | 0.065095 | -0.177354 | 0.051345 | -0.110389 | -0.004062 | 0.077395 | 0.009678 | -0.142832 | -0.128338 | -0.012429 |

| 4 | 5 | 5 | 5 | 5 | 0.117509 | -0.060412 | -0.043300 | -0.058867 | -0.221357 | -0.016098 | ... | 0.112433 | -0.064500 | 0.005503 | 0.029761 | 0.070613 | 0.098602 | 0.075473 | -0.226324 | -0.129227 | -0.049931 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 659 | 761 | 761 | 761 | 761 | -0.034865 | 0.018155 | -0.053817 | -0.031378 | -0.214945 | 0.058257 | ... | 0.144927 | -0.182747 | 0.003142 | -0.076069 | -0.011294 | 0.081166 | 0.273015 | -0.279196 | -0.179816 | 0.047407 |

| 660 | 763 | 763 | 763 | 763 | 0.056317 | -0.063410 | -0.064464 | -0.036865 | 0.015957 | -0.037839 | ... | 0.083606 | 0.073531 | -0.038036 | 0.075931 | 0.037699 | 0.094174 | 0.083045 | -0.163806 | -0.060995 | -0.029095 |

| 661 | 764 | 764 | 764 | 764 | -0.048466 | 0.105521 | -0.041466 | 0.124590 | -0.390372 | 0.002299 | ... | 0.107293 | -0.121716 | 0.035031 | -0.363069 | -0.071770 | -0.120439 | 0.053240 | -0.328661 | -0.090173 | -0.005702 |

| 662 | 766 | 766 | 766 | 766 | -0.101014 | -0.149288 | -0.065700 | -0.066804 | -0.224008 | 0.081384 | ... | -0.055213 | -0.229109 | -0.065813 | -0.020026 | -0.135153 | 0.224883 | 0.089857 | -0.158738 | -0.102280 | 0.047453 |

| 663 | 767 | 767 | 767 | 767 | -0.004216 | -0.052974 | -0.006410 | 0.042287 | -0.022653 | 0.053430 | ... | 0.082339 | -0.107670 | -0.097196 | 0.003726 | -0.051594 | 0.003086 | 0.055449 | -0.004521 | 0.065674 | 0.023406 |

664 rows × 68 columns

# select only embedding columns

item_embeddings = item_embs_df.iloc[:, 4:]

item_embeddings.head()

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 54 | 55 | 56 | 57 | 58 | 59 | 60 | 61 | 62 | 63 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -0.073576 | 0.085595 | -0.180240 | -0.027148 | -0.191017 | -0.104568 | 0.004146 | -0.170429 | -0.071614 | -0.087105 | ... | 0.248656 | -0.045637 | 0.076258 | -0.081022 | 0.060888 | 0.162383 | 0.078302 | -0.216183 | -0.144103 | -0.223617 |

| 1 | 0.106903 | 0.024441 | -0.055793 | -0.141893 | -0.078035 | -0.036643 | 0.055592 | -0.008832 | -0.137167 | 0.261487 | ... | 0.071353 | -0.061388 | 0.100870 | -0.176205 | -0.091968 | 0.078213 | 0.111239 | -0.038364 | -0.010783 | -0.153099 |

| 2 | -0.183815 | 0.066229 | -0.169174 | -0.011347 | -0.293196 | 0.133997 | -0.136634 | -0.095579 | -0.037962 | 0.049111 | ... | 0.242444 | -0.164485 | 0.186567 | -0.027846 | 0.047943 | 0.129363 | 0.071678 | -0.315024 | -0.112482 | 0.003846 |

| 3 | -0.103461 | -0.035693 | -0.137998 | 0.056771 | -0.322394 | 0.082303 | 0.053787 | 0.075843 | -0.042746 | -0.175128 | ... | 0.065095 | -0.177354 | 0.051345 | -0.110389 | -0.004062 | 0.077395 | 0.009678 | -0.142832 | -0.128338 | -0.012429 |

| 4 | 0.117509 | -0.060412 | -0.043300 | -0.058867 | -0.221357 | -0.016098 | 0.026822 | -0.036691 | -0.077781 | -0.048962 | ... | 0.112433 | -0.064500 | 0.005503 | 0.029761 | 0.070613 | 0.098602 | 0.075473 | -0.226324 | -0.129227 | -0.049931 |

5 rows × 64 columns

# save to disk

item_embeddings.to_parquet('item_embeddings.parquet')

That’s it. You have learned how to train and evaluate a Two-Tower retrieval model and how to export the components required to deploy it and generate recommendations. To learn more about serving a model with Triton Inference Server, explore the examples in the Merlin and Merlin Systems repos.